BioByte 122: OpenAI postulates on AI's future capabilities in biology, STATE predicts cellular responses to perturbation, in vivo CAR T generation, and AlphaGenome tackles genetic predictions

Welcome to Decoding Bio’s BioByte: each week our writing collective highlight notable news—from the latest scientific papers to the latest funding rounds—and everything in between. All in one place.

Penultimate reminder to sign up to join us for a reception to close out our third annual AI x Bio Summit on July 22! Enjoy an evening of wine and conversation beneath the lights of the storied New York Stock Exchange trading floor as we reflect, connect, and celebrate. It’s a chance to engage with fellow founders, researchers, and operators shaping the future of biology and technology. Spaces are filling up fast so please register to reserve your spot here. We can’t wait to see you there!

What we read

Blogs

Preparing for future AI capabilities in biology [OpenAI, June 2025]

OpenAI has released their approach to establishing safeguards when it comes to the biological capabilities of their models:

Training the model to refuse or safely respond to harmful requests: those that are explicitly harmful or enable bioweaponization, and avoiding responses that provide actionable steps for dual use requests (such as virology).

Detection systems: detect and alert about risky or suspicious bio-related activity. Model responses will be blocked and human review is initiated when needed.

Monitoring and enforcement: misuse will result in suspension of accounts, and may notify law enforcement in extreme cases.

Red teaming: teams of experts try to break OpenAI’s safety mitigations to bypass their defenses end-to-end. These are both biology and red-teaming experts. The biology experts are necessary as they are not experienced in exploiting model vulnerabilities. Pairing them up leads to the most sophisticated red teaming.

Security controls: protecting model weights to mitigate exfiltration risk is a priority.

Medical AI can transform medicine — but only if we carefully track the data it touches [Akhil Vaid, Nature, June 2025]

The complexity of biology lends itself to convoluted datasets that are difficult to parse. Predictive modeling, usually done with supervised machine learning models, is one increasingly popular approach being employed to address this challenge. These predictive models are given labeled datasets, then tasked with building correlations between trends in the data and the outcomes. Successful models have the potential to increase efficacious resource use, but those that over or under predict risk can yield wasted time and money. Validating model performance against new datasets is one way to measure a model’s usefulness, but new, untainted datasets are increasingly difficult to come by, largely because of the predictions that come from the models themselves.

For example, if a model predicts with strong certainty that a patient will go septic, doctors will be able to preemptively treat the patient and prevent onset. If this outcome is subsequently used to train the model, the model sees that strong signals for sepsis ultimately result in no development of the infection, tainting the previously established correlation. Situations like this are far more common than ideal, especially as the database used to train many models—the electronic health record (EHR)—is fed data from outcomes that likely used AI in the initial diagnosis. The gradual inclusion of more data with false associations can lead to model drift, a phenomenon where a model’s predictions become less accurate. The rate of model drift is also exacerbated when multiple models are at play, as a prediction and subsequent treatment based on one model could render the other obsolete.

One potential solution comes from the steel industry. Since the advent of nuclear weapons, all steel contains some amount of radioactive material. This contamination makes them unsuited for use in radiation-sensitive instruments like a Geiger counter, so the steel workers will instead harvest old steel from products like ships that were built before radiation was ubiquitous. For these predictive models, the ‘untainted steel’ is clean, unaltered datasets. However, producing these untainted, gold-standard datasets would potentially result in depriving patients the essential care afforded by the model. Another proposed solution is having practitioners systematically record in which cases models were used, effectively annotating the data to not be used to train the model. Despite these challenges, it is clear that predictive modeling will eventually help enable greater diagnostic success. The field has a long ways to go to get there, but the potential benefit is too great to miss out on.

Papers

Predicting cellular responses to perturbation across diverse contexts with STATE [Adduri et al., preprint, June 2025]

In February 2025, the Arc Institute released their Virtual Cell Atlas of 300 million cells, including a significant external contribution from Tahoe (formerly Vevo Therapeutics) of the open source Tahoe-100M dataset that captures 60,000 drug-cell interactions. Indeed, the ability to model how perturbations like drugs affect cells is of critical importance to drug discovery efforts. This week, the Arc Institute and collaborators have released STATE, a model that can accurately predict the effect of perturbations on cells while accounting for inherent biological noise and experimental variation. Furthemore, they have built a new set of benchmarks called CELL-EVAL to evaluate both STATE and other methods on more meaningful perturbation tasks, setting the stage for new models that can better represent the dynamic behaviors of cells.

STATE is capable of reasoning over various scales from genes, to cells, and populations. The model achieves this through the use of two main modules - the State Embedding (SE) model and the State Transition (ST) model. The SE module is tasked with learning cell-level embeddings that can capture subtle effects of perturbations while also accounting for inherent biological heterogeneity and experimental variation within samples. Separately, the ST module uses a transformer architecture to reason over either raw gene counts or learned SE embeddings to parse perturbation effects across cells or populations. STATE was compared against multiple previous state-of-the-art models like scVI and scGP, as well as simpler linear models. Apart from achieving superior results on baseline cell-type generalization tasks, STATE also demonstrated significant gains over baseline models when tested on specific perturbation datasets like Tahoe-100M and a cytokine signaling perturbation dataset from Parse Biosciences.

Notably, the authors also showcased STATE’s ability to successfully predict perturbation effects over baseline models. Focusing on the C32 melanoma cell line (which was not present in STATE’s training data) in response to the drug Trametinib, STATE significantly outperformed baseline models in predicting differentially expressed genes and log-fold changes. Baseline models simply scored most genes in C32 with high significance, demonstrating that they were unable to identify true perturbation signals from noise when using rudimentary averaging approaches. Furthemore, STATE was also able to predict downstream phenotypic effects like cell survival when fine tuned with a regression model in contrast to baselines. These studies demonstrate the ability of STATE to provide accurate perturbation predictions on unseen data for tangibly useful tasks. The authors note that STATE has not yet been evaluated on wholly unseen datasets. It will be interesting to see how STATE might perform on new perturb-seq datasets like the recently released X-Atlas/Orion from Xaira Therapeutics. However, it is clear that the model is a significant step towards meaningfully integrating perturbation based data into a useful platform to facilitate faster and more accurate in silico drug discovery tasks.

AlphaGenome: advancing regulatory variant effect prediction with a unified DNA sequence model [Avsec et al., preprint, June 2025]

While it is safe to say that models like AlphaFold have solved the protein structure prediction problem, the same cannot yet be said for currently available methods for probing the intricacies of genomes. Indeed, a few months after Google DeepMind won half of the 2024 Nobel Prize in Chemistry for the AlphaFold model series, vice president of research at DeepMind, Pushmeet Kohli, laid out the company’s next ambitious target in the biological space. In a January 2025 interview, Kohli remarked that his team is keen to “understand the semantics for DNA, [and] to know what happens with the problems involving variants of unknown significance.” Now with the release of AlphaGenome, aimed at predicting various genomic properties and associated variant effects, DeepMind has made its next big step towards that goal.

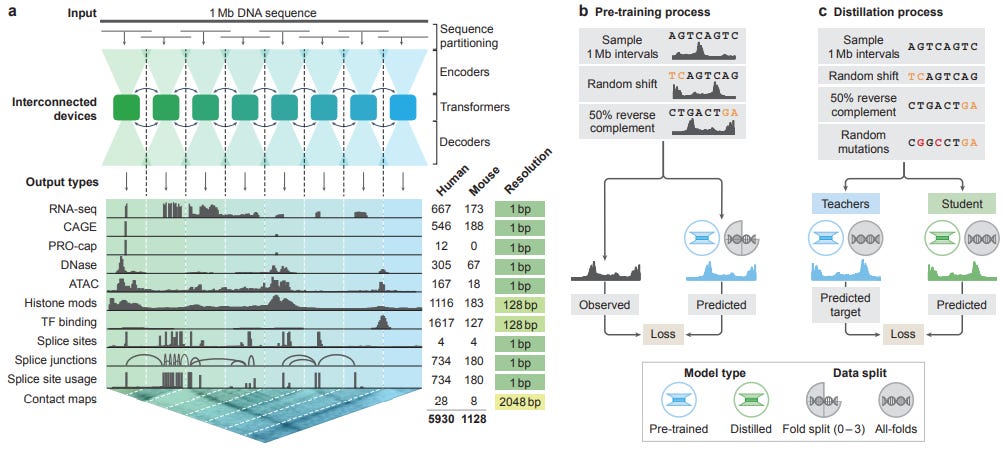

Given the breadth of experimental methods used to probe genomes, uncover regulatory mechanisms within, and quantify variant effect, there is a range of sequence-to-function models that predict “genome tracks” like gene expression, chromatin accessibility, and splice sites. While previous models are either highly specialized for one task while restricting input length or generalist long-context models that sacrifice resolution, AlphaGenome is the first to operate at the single base-pair level, while being able to handle inputs of up to one million bases, and provide accurate predictions across a range of genome tracks and data modalities. The model achieves these capabilities using architecture themes similar to those found in AlphaFold3. AlphaGenome uses a dual-representation scheme, where sequences can be either a one-dimensional embedding that preserves local information or two-dimensional embeddings to handle interactions with other elements. The authors use a series of convolutional layers to extract local sequence information before using larger transformer blocks to capture distal effects and interactions between linear elements. Also similar to AlphaFold3, the model makes significant use of a pre-training and knowledge distillation scheme. Here, “all-folds” models (referred to as a teacher model) is trained on all available data, and a single “student” model is then trained to mimic the outputs of the teachers. This allows for the development of a lightweight, efficient model that still matches the performance of much larger precursors.

With a model meant to tackle so many modalities, the AlphaGenome team evaluated their model on a suite of twenty-four genome track tasks, as well as twenty four variant effect tasks. The authors reported that the model outperformed previous state-of-the-art methods on 22/24 benchmarks for both genome tracks and variant effect. While the task-by-task advances in performance were certainly notable, perhaps the most notable, complete evaluation of AlphaGenome was the virtual screen of the TAL1 gene in relation to T-cell acute lymphoblastic leukemia (T-ALL). T-ALL is a cancer caused by gain-of-function mutations to the TAL1 gene; to that end, the authors probed three known mutations and were able to show that various genetic track predictions correlated with known biological causes of the disease. For example, the model predicted an increase in activating histone marks for a mutation in a neo-enhancer region, indicating that the region of DNA was generally more accessible. Furthemore, it also predicted a decrease in repressive histone marks at the transcription start site and increased active transcription marks across the whole gene sequence, which is consistent with the known gain-of-function behavior. Furthemore, the authors expanded their study to all known mutations for TAL1 and showed the model could discriminate between oncogenic and non-oncogenic variants. Finally, the model was also able to capture the effects of transcription factor motifs inserted via mutagenesis, and correlate that to increased expression of the gene.

AlphaGenome’s multimodal prediction capabilities, combined with its single base pair resolution and long context capabilities position it as a foundational tool for eventual clinical genetics applications. However, the authors do note that the current model does struggle with broader tissue-specific context tasks, and is also applicable only to human and mouse genomes (though how much of a practical limitation this is can be debated). Furthemore, the model does not currently have the ability to reason over more niche modalities like methylation and structure, which are known to also play significant roles in genomic regulation. Finally, the model is not yet capable of doing any inference for personalized medicine (referred to as personal genome prediction in the paper) as of yet, which is perhaps where it is most needed. Nonetheless, AlphaGenome’s release heralds a new tool for scientists to use in their pursuit of rapid computational experimentation, diagnostics, and genomic medicine breakthroughs.

In vivo CAR T cell generation to treat cancer and autoimmune disease [Hunter et al., Science, June 2025]

In 2022, researchers from the University of Pennsylvania published results demonstrating the first use of LNP + mRNA-based technology to develop CAR-T in vivo in mouse models to treat fibrosis. Since then, the work has spun out into Capstan Therapeutics (to the tune of $340M), and this week they published preclinical results using LNP + mRNA-based in vivo CAR in monkeys for B cell depletion. This is a crucial positive indicator towards moving into human clinical trials, where in vivo CAR-T can potentially bring the efficacy of CAR-T therapy at the price of mass-manufactured LNP-packaged mRNA.

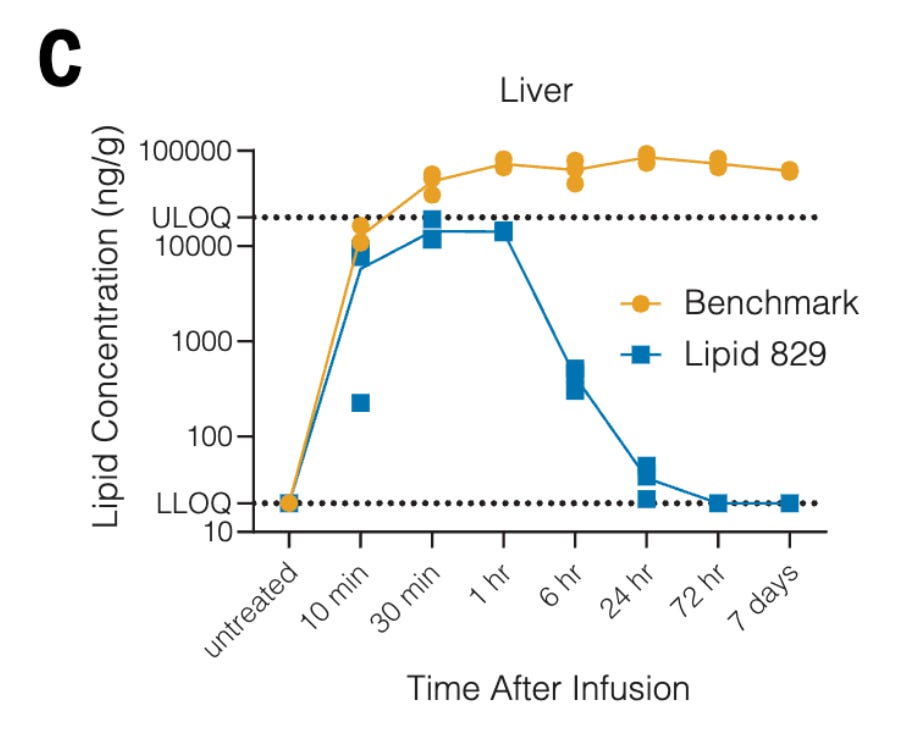

The primary challenge with in vivo CAR-T is delivering the CAR construct specifically to the targets of interest. Lipid nanoparticles (LNPs) generally accumulate in the liver. Hunter et al. screened cationic lipids for their LNP formulation, eventually choosing Lipid 829 (L829) which showed fast clearance from the liver. When testing in mouse models, they found the combination of L829 and a CD5 antibody attached to the LNPs were able to effectively detarget the liver while targeting T-cells in the spleen. One critical parameter they were aiming for was also to not target CD4+ cells, which have been found to drive CAR-T adverse events. They also optimized the codons and UTR of the mRNA, demonstrating in a tumor challenge setting that greater numbers of CAR molecules on each cell led to improved efficacy.

In one step closer to human studies, they tested their LNP + mRNA formulation for the targeting of B-cells in a species of monkey called cynomolgus monkeys. This can be used as a proof of concept for treatment of autoimmune disease as well as B-cell lymphomas, although they didn’t explicitly model autoimmune disease nor B-cell lymphoma in the monkey models. What they did successfully show was CD8+ T-cell-driven depletion of B-cells when using the LNP + mRNA formulation dosed once every 3 days over a 9-day period, successfully resetting the B-cell population to a “naive” state.

Blending simulation and abstraction for physical reasoning [Sosa et al., Cognition, January 2025]

How do humans quickly reason about the physical world? We can judge whether it’s safe to cross a busy street, catch a fast-approaching ball - and if someone tells us it’s suddenly made of lava - instantly steer clear. Classic cognitive theories have argued that we either run an internal “physics engine” (a noisy mental simulation akin to PyBullet) or rely on fast, learned shortcuts and heuristics. Sosa et al., focus on a third option: showing people dynamically blend brief mental simulations with quick visual abstractions.

In this paper, the authors formalise a unified algorithm that runs a quick mental simulation in parallel with a linear-projection abstraction. At each moment, the brain compares the two predicted paths: if they agree closely enough, it accepts the cheaper shortcut; if not, it extends the simulation. This arbitration uses both the speed of heuristic reasoning and the accuracy of simulation when required. The paper describes two key experiments. Experiment 1 measured reaction-time savings, where participants judged whether a ball would land in a target after disappearing. Trials varied in the true trajectory’s length and in whether a straight-line cue was visible. Reaction times grew with trajectory length but were consistently faster on trials with an obvious straight path. Experiment 2 measured systematic errors, which scenes crafted to fool a straight-line heuristic. Results showed participants falling for the short-cut and recovering when the mismatch triggered deeper simulation, which supports the blended model.

Why do we care? The current state of the art models show poor performance on intuitive physical reasoning tasks, so understanding how people pull this off could point us toward new ways to build systems that reason about physics in real time - in a data and computation-efficient manner with robust generalisation.

Notable deals

Draig Therapeutics Launches with $140 Million (£107 Million) Total Investment to Advance its Portfolio of Next-Generation Therapies for Major Neuropsychiatric Disorders. Access Biotechnology led the Series A of Draig Therapeutics to fund four clinical studies, including two Phase II studies of their main treatment for Major Depressive Disorder. The work stems from work from John Atack and Simon Ward at Cardiff University who have been studying the glutamate and GABA pathways for decades. The company has already completed a Phase Ia with 60 participants, data for which was used to fuel the raise.

Kymera suffers Sanofi setback but secures $750M Gilead deal. As reported by Fierce Biotech, Gilead Sciences and Kymera Therapeutics have agreed to a deal worth up to $750 million, plus royalties, for an exclusive license to a CDK2-directed molecular glue degrader. This oncology drug is designed to remove the CDK2 protein from cells, which acts as a driver for tumor growth. The therapy aims to address resistance to existing CDK4/6 inhibitors in breast cancer, potentially with fewer side effects than traditional inhibitors.

ForSight Robotics raises $125m for cataract surgery robotic platform. ForSight Robotics bags $125M in a Series B to test out its robotic eye surgery platform for the treatment of cataracts. They’re tackling a market they expect to deepen, as demand for ophthalmologists expects to rise by 24% over the decade while the supply of practitioners decreases by 12%. More than two dozen ophthalmic surgeons have already tested out the Oryom system in animal models.

Royalty signs off on $2B to bankroll Revolution's RAS cancer drug's road to regulators. Royalty Pharma has agreed to help push Revolution Medicine’s pan-RAS inhibitor daraxonrasib through phase III studies and into the market. $1.25B of the money is guaranteed in exchange for a portion of net sales over the next 15 years. The other $750M is optionally accessible by Revolution, and is dependent on some milestones. The funding structures traditionally put forth by big pharmas generally are acquisitions or leave the original drug discoverer with a smaller double-digit portion of the drug. Royalty Pharma’s unique financing structure gives Revolution Medicines most of the profit if the drug does reach blockbuster status, while allowing Royalty Pharma to take a piece of that pie.

In case you missed it

Million Species Listing: Basecamp Research Unearths Trove of Sequence Data From Novel Species

Basecamp Research has launched BaseData, a massive new database containing 9.8 billion protein sequences from one million newly discovered species. This represents a 10-fold increase in known protein diversity compared to all public databases combined, addressing a significant data shortage in the field. The goal is to dramatically improve the performance of AI foundation models used for discovering and designing new proteins for therapeutics, sustainability, and other applications.

Diffuse Bio Launches DSG2-mini AI Model for Protein Binder Design

Diffuse Bio has launched a new AI model, DSG2-mini, to help researchers design custom protein binders with high precision. This tool is accessible through a user-friendly web platform called DiffuseSandbox, which does not require any coding expertise. The launch aims to make advanced protein design more accessible to the global research community, allowing for faster development in areas like therapeutics and diagnostics.

Sarepta Therapeutics’ AAV-based gene therapy for DMD has resulted in two deaths after FDA approval. A death during AAV clinical trials famously led to the decline of the gene therapy fieldin the early 2000s. The FDA is still assessing what action to take on regulation of the drug, which was approved in June 2024 for individuals over age 5 with the disease.

What we liked on socials channels

Field Trip

Did we miss anything? Would you like to contribute to Decoding Bio by writing a guest post? Drop us a note here or chat with us on Twitter: @decodingbio.